Project Description

The traditional approach to assessing spoken English is to have a well-trained human assessor listen to the test, either live or recorded, and mark the performance on a standardised scale. There are two main problems with this. First, the process is expensive, as it requires the training of an assessor. Secondly, the process does not scale to large numbers of candidates. There is considerable interest in automating this approach to address these problems. The goal of this project is to develop techniques to automatically evaluate oral communication skills, in collaboration with Cambridge University ESOL. The project will make use of state-of-the-art automatic speech recognition (ASR) approaches to provide transcriptions and features that characterise the communication skills of the candidate.

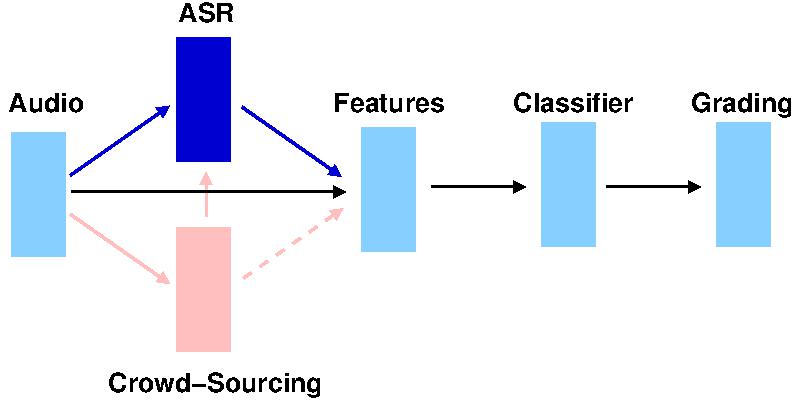

The figure above gives an overview of the approaches that will be taken.

Specific areas that may be examined include:

- adapting a speech recognition system to non-native speakers;

- automatic correction of crowd-sourced transcriptions;

- use of crowd-sourced transcriptions to train speech recognition systems;

- extracting features from transcriptions (either from the ASR system or crowd-sourced) for assessing English;

- designing a classifier that, given a set of features, assesses spoken English as a second language.
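As a rough illustration of the feature-extraction item above, the sketch below computes a few simple text features from a single transcription. The feature set, the filled-pause list, and the function name `transcription_features` are assumptions made for illustration only; they are not the features or methods used by the project.

```python
import re
from collections import Counter

# Hypothetical list of filled-pause tokens (an assumption, not from the project).
FILLED_PAUSES = {"um", "uh", "er", "erm", "mm"}


def transcription_features(text: str) -> dict:
    """Compute a few simple, illustrative features from one transcription."""
    # Lower-case the text and keep alphabetic tokens (apostrophes allowed).
    tokens = re.findall(r"[a-z']+", text.lower())
    n = len(tokens)
    counts = Counter(tokens)

    # Number of tokens that appear in the filled-pause list.
    n_pauses = sum(counts[p] for p in FILLED_PAUSES)

    # Immediate word repetitions such as "the the", a crude disfluency cue.
    n_repeats = sum(1 for a, b in zip(tokens, tokens[1:]) if a == b)

    return {
        "num_tokens": n,
        "type_token_ratio": len(counts) / n if n else 0.0,
        "filled_pause_rate": n_pauses / n if n else 0.0,
        "repetition_rate": n_repeats / n if n else 0.0,
    }


if __name__ == "__main__":
    print(transcription_features("um I I went to the the shop"))
```

A feature dictionary of this kind could then be passed to any standard classifier or regressor to predict a grade; which features are informative for non-native speech is exactly the open question the list above raises.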

There is funding available on this project for short-term contracts or studentships at Cambridge University. If you are interested, please contact Prof Mark Gales.

Personnel Associated with the Project

Past members

- Dr Kai Yu [Senior Research Associate]

- Zhi Chen Neo [UROP student]

![[Dept of Engineering]](http://www.eng.cam.ac.uk/images/house_style/engban-s.gif)
